Generative AI and Deepfakes – The future of fraud and extortion

The Asian Crime Century briefing 90

As new technologies spread across Asia, some are increasingly being used to conduct fraud and extortion. The growing use of generative AI to create realistic digital images such as ‘deepfakes’, together with the expansion of unregulated cryptocurrencies for illicit payments, has the potential to create a tsunami of new age fraud and extortion. Considering that Asia has been suffering from a major fraud pandemic driven by industrial scale criminal enterprises based in Cambodia, Myanmar, and the Philippines, the greater use of generative AI is likely to help transnational organised crime groups expand even further.

Fraud is criminal deception for financial gain. Generative AI can provide new fraud tools to enable criminals to more easily deceive victims and evade detection. Such generative AI tools can include ‘deepfakes’, which are artificially created video or audio recordings designed to mimic real people for fraud or extortion. Deepfakes and other tools can be used for ‘synthetic identity fraud’ that combines real data with high quality fabricated information to create false identities that can be used to conduct unauthorised transactions. AI apps can create even better quality phishing emails and text messages that are indistinguishable from real bank communications. Long gone are the days of highly amusing ‘Nigerian letters’ that used unbelievable and poorly drafted language to dupe victims into advance fee frauds.

The growing use of deepfakes should be a major cause for concern. US firm Security Hero reported in the ‘2023 State of Deepfakes’ that the total number of deepfake videos online in 2023 is 95,820, representing a 550% increase over 2019; deepfake pornography makes up 98% of all deepfake videos online; 99% of the individuals targeted in deepfake pornography are women; and South Korean singers and actresses constitute 53% of the individuals featured in deepfake pornography and are the most commonly targeted group.

Creative Korea Deepfakes

Korea has been a leading Asian nation using new technology for business, but generative AI is having a social impact with increasing criminal uses. The Korean National Police Agency announced last week that they are currently investigating 513 cases of deepfake sex crimes. The number of reported cases has increased from 180 in 2023, 160 in 2022, and 156 in 2021. Most of the victims, 62%, are teenagers, and of the 318 suspects detained by the Korean police this year, almost 80% were teenagers. The increasing number of criminal cases and concentration amongst young people is raising widespread concern in Korea.

The deepfake problem in Korea involves widespread use of AI deepfake chatrooms on the Telegram app. Easy access to high speed Internet and the growing proliferation of AI apps have led to a preponderance of young men and boys using selfie images, mostly of girls, to create deepfake pornographic videos. The misuse of Telegram to share deepfake pornographic videos has become so severe that earlier in September Telegram’s East Asia representative apologised for miscommunications regarding the issue, removed 25 pieces of sexually exploitative material, and created a hotline to respond to enquiries from the Korean authorities.

The concerns of the Korean authorities that deepfakes could be used more widely to target others in society were illustrated when the defence ministry announced recently that photos of soldiers and of military and defence ministry officials have been made unavailable on the military's Onnara System intranet and the websites of military units, due to concerns that they could be abused to make sexually abusive deepfake images. Previous investigations have found military intranet photos being used for deepfakes. The misuse of personal images to commit extortion is not new in Korea.

In November 2019, 25 year old Cho Ju-bin was found guilty of charges relating to being the mastermind of pay to view chatrooms on Telegram where users paid cryptocurrency to watch sexually exploitive content of women who were blackmailed into taking part. Cho was eventually sentenced to 45 years imprisonment. The case widened as investigations found multiple chatrooms.

In May 2020, 24 year old Moon Hyung-wook was arrested and charged with coercing 21 women and girls into sharing almost 3,800 sexually explicit videos of themselves for distribution on a sexual exploitation chat room called Nth Room on Telegram. The Nth Room and other similar Telegram chatrooms were operated as pay-to-view online clubs offering abusive sexual videos of women and underage girls, and are believed to have had around 260,000 users. Moon, whose Telegram username was ‘God God’, was sentenced to 34 years imprisonment.

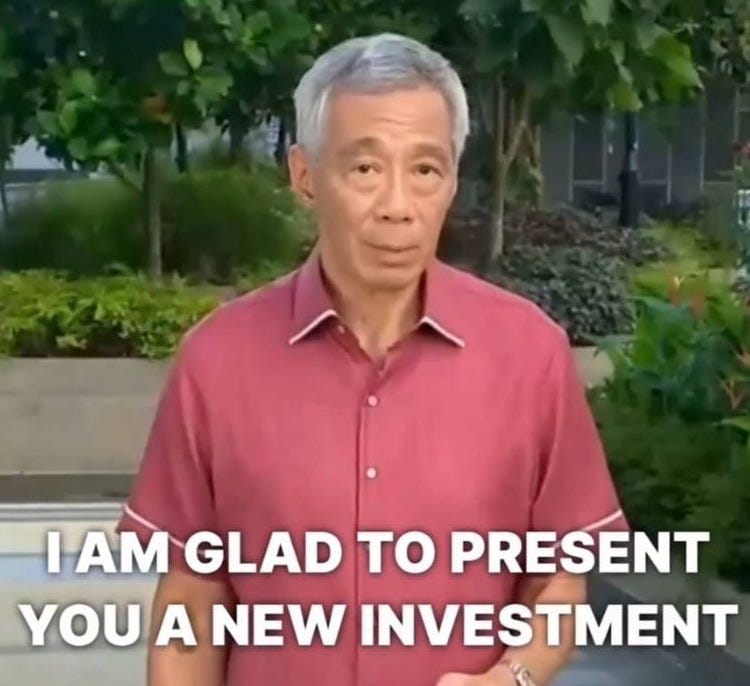

Uniquely Singapore Deepfake

In December 2023, a video of Deputy Prime Minister Lawrence Wong promoting an investment scam went viral on social media. The video included the logo of The Straits Times, a long established reputable news publication in Singapore, with Wong stating that “Dear Singaporeans, the day has come. I am pleased to introduce to you the Quantum Investment Project. Starting November 11th 2023 we have launched a project that will allow everyone to receive guaranteed monthly dividends. With minimal investment the automated trading system makes only the most profitable trades allowing investors to increase their income. Earnings from $8,000 are absolutely real. The company has made sure that this income is available to everyone. That is why we have reduced the minimum investment amount to $250. All payouts are guaranteed by me personally and my reputation.”

The video was a very high quality deepfake that included hyperlinks to websites seeking investment in schemes with guaranteed returns. The targeting of senior government officials who are widely trusted by the public continued in June 2024, when Senior Minister Lee Hsien Loong posted on Facebook that his likeness was also being used in a deepfake fraud: “You might have seen a deepfake video of me asking viewers to sign up for an investment product that claims to have guaranteed returns. The video is not real! AI and deepfake technology are becoming better by the day. On top of mimicking my voice and layering the fake audio over actual footage of me making last year’s National Day Message, scammers even synced my mouth movements with the audio. This is extremely worrying: people watching the video may be fooled into thinking that I really said those words.

“Please remember, if something sounds too good to be true, do proceed with caution. If you see or receive scam ads of me or any other Singapore public office holder promoting an investment product, please do not believe them.”

The deepfake videos of Deputy PM Wong and Senior Minister Lee were of such exceptionally high quality that no ordinary person would be able to differentiate them from real recordings, except by reference to the context (i.e. why would such a senior government official recommend financial products?).

The concern that criminal use of deepfakes can have a political impact, such as by lowering public confidence in officials, has prompted the Singapore Government to propose a new law banning deepfakes and digitally manipulated content during elections. The Protection from Online Falsehoods and Manipulation Bill proposes to prohibit the publication of digitally generated or manipulated online election advertising that realistically depicts a candidate saying or doing something that he or she did not in fact say or do. The new law covers only AI-generated misinformation that misrepresents election candidates, and applies until polling closes.

Everyone Looks Real

In February 2024, the Hong Kong office of British firm Arup reportedly lost HK$200 million (US$25.6 million) in a fraud after an employee was fooled by a digitally recreated version of its chief financial officer ordering money transfers in a video conference call. The Hong Kong employee had received a phishing email in January, purportedly from the Arup Chief Financial Officer based in London, informing him that a secret transaction needed to be made. He then joined a video conference with others whom he believed to be senior management of Arup, but who were digital recreations made using deepfake technology.

Identity verification firm Sumsub claims that their client data shows AI powered deepfake fraud as the fastest growing means of identity fraud. According to Sumsub, “In 2023, deepfake technology continues to pose a significant threat in the realm of identity fraud. The widespread accessibility of this technology has made it easier and cheaper for criminals to create highly realistic audio, photo, and video manipulations, deceiving individuals and fraud prevention systems.”

The use of generative AI for fraud is not unique to Asia and is growing around the world. But in Asia, transnational organised crime has generated billions of dollars in criminal proceeds over the past decade, and those proceeds have funded a resilient criminal infrastructure that national law enforcement agencies cannot defeat. This criminal infrastructure has been established in Cambodia, Myanmar, and the Philippines, and has made use of banking facilities in financial centres such as Hong Kong and Singapore. Whilst there is growing enforcement action against parts of this infrastructure, the massive proceeds of crime have already been diverted into legitimate investments that can be used to mask new criminal ventures.

To address this threat, law enforcement agencies need a step change in collaboration with new cross jurisdictional multinational task forces focussed on high technology fraud. The private sector requires a transformation in its approach to financial crime prevention with far smarter use of AI technology that has a genuine impact on preventing crime and protecting consumers (not just making it harder for consumers to do their banking). Not only is the future of fraud in AI and deepfakes, but so also are some of the solutions.